Where should your data storage be placed: inside the server or out on the storage area network (SAN)?

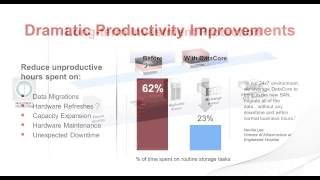

DataCore recently announced SANsymphony-V10, the company’s 10th generation software platform, deployed at over 10,000 customer sites around the world. The key problem that we looked to address in this release was not fully covered in the press, which tends to focus on specific features. The main aim of this release was to deal with the problem of separate and isolated storage islands, caused by incompatibilities between different storage vendors' offerings and by the use of Flash, which has revived the need for server-side storage. This, together with the diversity of new technologies, has disrupted the storage market. Today, the storage world consists of a spectrum of storage approaches and devices, and what is needed is unification and common management that can work across different vendor platforms and technologies. This is one of the key challenges that true software-defined storage architectures must resolve.

What is needed is a solution that spans all the different platforms: a platform that provides end-to-end storage services and can keep Virtual SANs, Converged Appliances, Flash Devices, Physical SANs, Networked and Cloud Storage from becoming ‘Isolated Storage Islands’.

IDC's Nick Sundby states the problem as follows in the release:

“It’s easy to see how IT organizations responding to specific projects could find themselves with several disjointed software stacks – one for virtual SANs for each server hypervisor and another set of stacks from each of their flash suppliers, which further complicates the handful of embedded stacks in each of their SAN arrays,” said IDC’s consulting director for storage, Nick Sundby. “DataCore treats each of these scenarios as use cases under its one, unifying software-defined storage platform, aiming to drive management and functional convergence across the enterprise.”

Flash and new storage technologies are driving a ‘Rethink’ on how we deal with storage and its growth and diversity. Storage is no longer just mechanical disk drives; it now encompasses a range of devices, from Flash memory to Virtual SANs to SANs to Cloud Storage.

The following article overviews how DataCore is tackling the issue of 'isolated storage islands':

But let’s examine further the issue of data storage placement, which with the advent of Flash technologies has become a major question.

DataCore's Augie Gonzalez recently wrote an interesting piece, ‘Which side are you on?’, which covers the trade-offs of server-side versus SAN-side storage. The article appears below:

Augie asks us to consider both sides of the storage placement argument and concludes that maybe we don't have to take sides at all.

There is a debate raging as to where data storage should be placed: inside the server or out on the storage area network (SAN). The split between the opposing views of the network grows wider each day. The controversy has raised concerns among the big storage manufacturers, and will certainly have huge ripple effects on how you provision capacity going forward.

DAS BACK IN THE LIMELIGHT

25 years ago, SANs were a novelty. Disks primarily came bundled in application servers - what we call Direct Attached Storage (DAS) - reserved to each host. Organizations purchased the whole kit from their favorite server vendor. DAS configurations prospered but for two shortcomings; one with financial implications and the other affecting operations.

First, you'd find server farms with a large number of machines depleted of internal disk space, while the ones next to them had excess. We lacked a fair way to distribute available capacity where it was urgently required. Organizations ended up buying more disks for the exhausted systems, despite the surplus tied up in the adjacent racks.

The second problem with DAS surfaced with clustered machines, especially after server virtualization made virtual machines (VMs) mobile. In clusters of VMs, multiple physical servers must access the same logical drives in order to rapidly take over for each other should one server fail or get bogged down.

SANs offer a very appealing alternative - one collection of disks, packaged in a convenient peripheral cabinet where multiple servers in a cluster can share common access. The SAN crusade stimulated huge growth across all the major independent storage hardware manufacturers including EMC, NetApp and HDS and it also spawned numerous others. Might shareholders be wondering how their fortunes will be impacted if the pendulum swings back to DAS, and SANs fall out of favor?

Such speculation is fanned by dissatisfaction with the performance of virtualized, mission-critical apps running off disks in the SAN, which has led directly to the rising popularity of flash cards (solid state memory) installed directly on the hosts.

HOST-SIDE VIEWPOINT

The host-side flash position seems pretty compelling, much as DAS did years ago before SANs took off. The concept is simple: keep the disks close to the applications and on the same server. Don't go out over the wire to access storage, for fear that network latency will slow down I/O response.

The fans of SAN argue that private host storage wastes resources and it's better to centralize assets and make them readily shareable. Those defending host-resident storage contend that they can pool those resources just fine: introduce host software to manage the global name space so they can get to all the storage regardless of which server it's attached to. Ever wondered how? You guessed it: over the network. Oh, but what about that wire latency? They'll counter that it only impacts the unusual case when the application and its data do not happen to be co-located.

Well, how about the copies being made to ensure that data isn't lost when a server goes down? You guessed right again: the replicas are made over the network.

What conclusion can we reach? The network is not the enemy; it is our friend. We just have to use it judiciously.
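To put rough numbers on "judiciously", here is a minimal sketch of the latency argument above. It assumes a simple weighted-average model in which a fraction of reads are served from host-local flash and the rest pay an extra network hop. The function name and the microsecond figures are hypothetical, chosen only for illustration; they are not measurements of any product.

```python
def expected_read_latency_us(local_us, network_us, locality):
    """Average read latency under a simple two-case model.

    local_us   -- latency of a read served from host-local storage
    network_us -- extra latency added when the read crosses the network
    locality   -- fraction of reads (0.0 to 1.0) served locally
    """
    if not 0.0 <= locality <= 1.0:
        raise ValueError("locality must be between 0.0 and 1.0")
    remote_us = local_us + network_us
    return locality * local_us + (1.0 - locality) * remote_us


# Hypothetical figures, for illustration only.
LOCAL_FLASH_US = 100.0
NETWORK_HOP_US = 400.0

if __name__ == "__main__":
    for locality in (1.0, 0.9, 0.5):
        avg = expected_read_latency_us(LOCAL_FLASH_US, NETWORK_HOP_US, locality)
        print(f"{locality:.0%} of reads local: about {avg:.0f} us on average")
```

Under these assumed figures, the average stays close to the local case while most reads are co-located with the application and climbs as locality drops. The model covers reads only; replica writes, as noted above, cross the network regardless.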

Now then, with data growth skyrocketing, should organizations buy larger servers capable of housing even more disks? Why not? Servers are inexpensive, and so are the drives. Should they then move their Terabytes of SAN data back into the servers?

Please see how DataCore is addressing the above issues with its latest release: DataCore SANsymphony-V10